iTEC is a contest dedicated to pupils and students with an interest in IT. This was its 13th edition, and over 200 participants showed up. It consists of a couple of short (~6-hour) challenges and 4 hackathons (web, mobile, game dev and embedded).

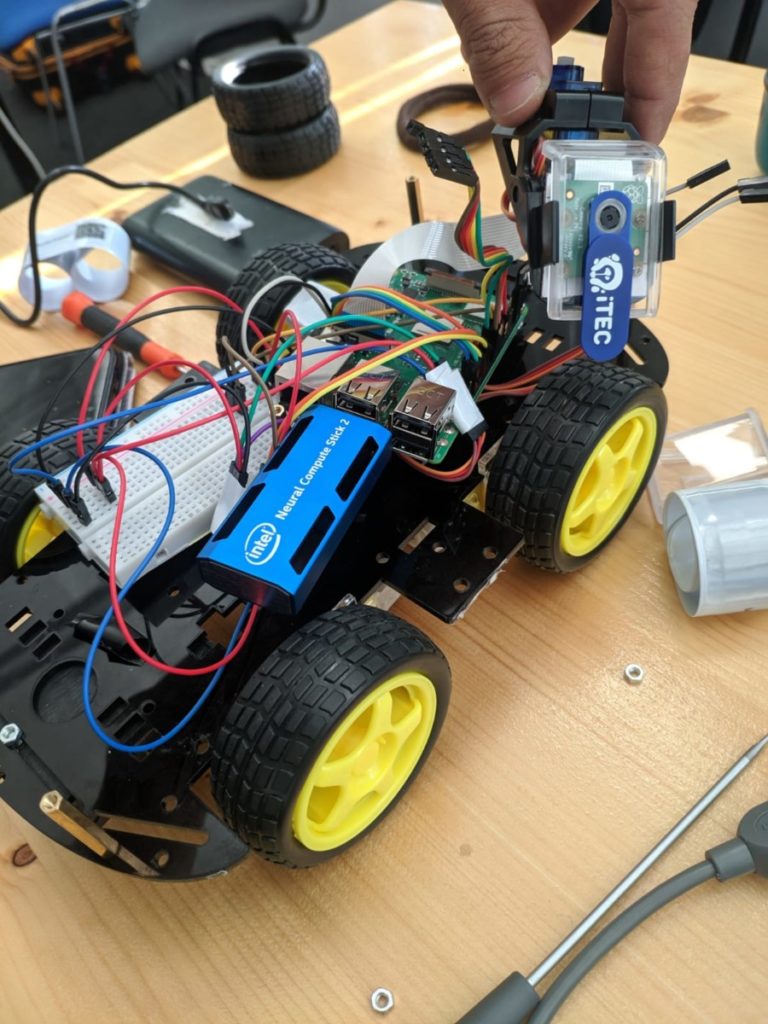

Together with Arin we took part in the embedded hackathon. We received:

- 1 x 4WD robotics kit, similar to this one

- 1 x Raspberry Pi 3

- 1 x Intel NCS2

- 1 x Raspberry Pi Camera

- assorted accessories like H bridges, level converters, battery holders and screws

Our idea

We wanted to build a rover that ran different style-transfer generative adversarial networks (GANs) on the NCS2. It would be remote controlled, with separate controls for rover movement and camera movement.

This would let people explore the real world through the eyes of Van Gogh, or of someone on an acid trip. And for those of you thinking we could’ve done it with a phone and a cloud computer: well, we could’ve, but GLaDOS wanted to live.

The hard how behind it

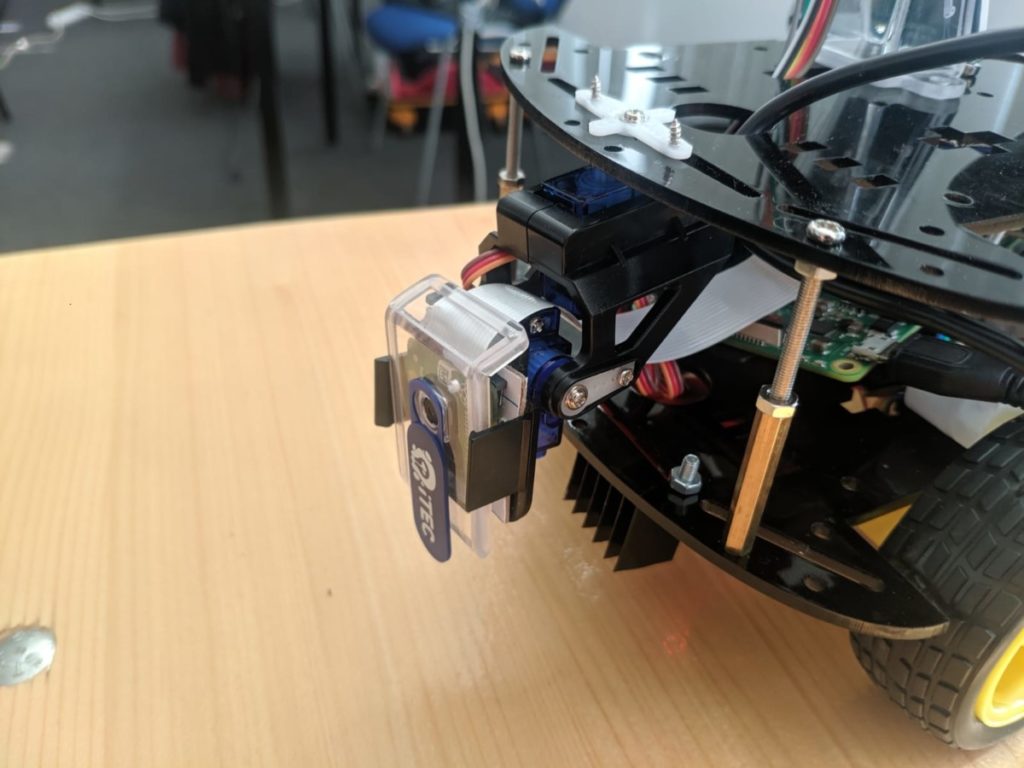

For the camera we used a 2-axis mount with SG90 servos.

We mounted it upside down because it looked cool, and it kind of resembled GLaDOS. We had some issues with the servo driver and ended up switching from the standard RPi.GPIO to the pigpio library, which worked out pretty well.
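For anyone curious what driving a hobby servo through pigpio looks like, here is a minimal sketch. It is not our actual code: the 500 to 2500 µs pulse range, the 0 to 180 degree mapping and the helper names are assumptions for illustration, and a real SG90 may need a narrower range. The only pigpio call used is `set_servo_pulsewidth`, and the `pi` object is assumed to come from `pigpio.pi()` on a Raspberry Pi with the pigpiod daemon running.

```python
# Assumed pulse-width limits in microseconds; tune these for your own servo.
SERVO_MIN_US = 500
SERVO_MAX_US = 2500


def angle_to_pulsewidth(angle_deg):
    """Map an angle in degrees (clamped to 0..180) to a pulse width in microseconds."""
    angle = max(0.0, min(180.0, float(angle_deg)))
    span = SERVO_MAX_US - SERVO_MIN_US
    return int(round(SERVO_MIN_US + (angle / 180.0) * span))


def set_angle(pi, gpio, angle_deg):
    """Send the servo on `gpio` to `angle_deg` and return the pulse width used.

    `pi` is expected to be a connected pigpio.pi() instance (hypothetical wiring:
    the GPIO number is whatever pin the servo signal line is attached to).
    """
    pulsewidth = angle_to_pulsewidth(angle_deg)
    pi.set_servo_pulsewidth(gpio, pulsewidth)
    return pulsewidth
```

On the Pi itself you would create the connection with `pi = pigpio.pi()` and then call something like `set_angle(pi, 17, 90)` to centre the servo, where GPIO 17 is just an example pin.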

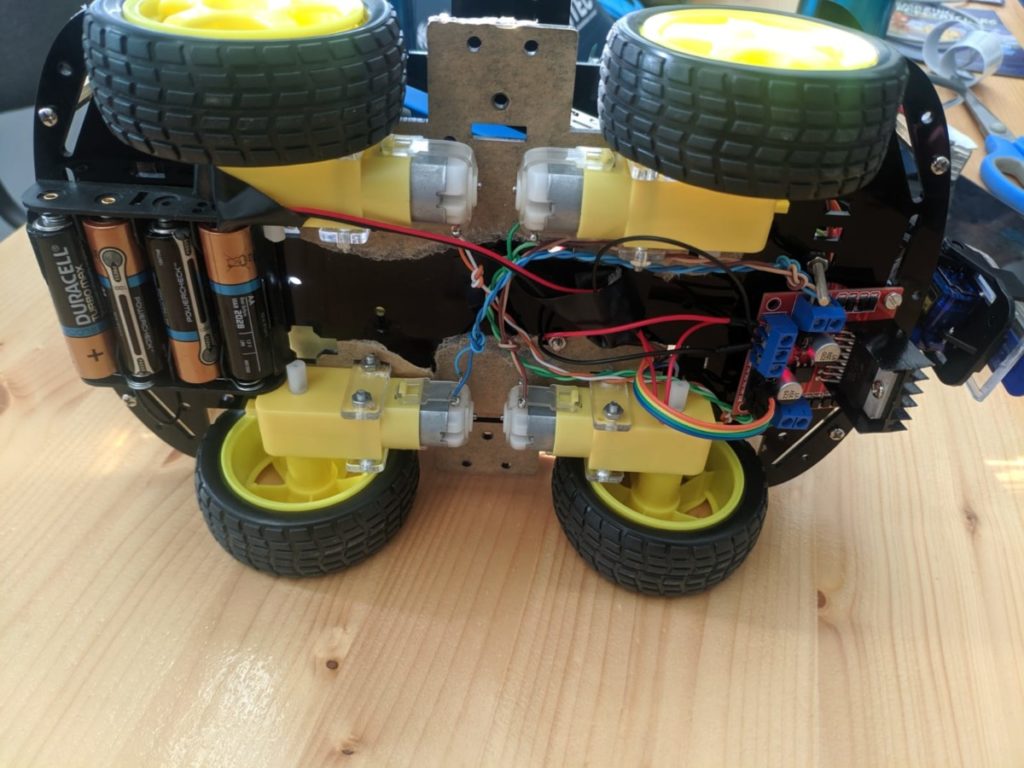

The motors were wired together in 2 parallel groups (left and right), and we used an H bridge powered by 4 AA batteries (later 8 AA batteries in parallel) to control them.

The brain of the whole machine is a Raspberry Pi 3, which controls the 4 motors and 2 camera servos through 3.3 V to 5 V bidirectional logic level converters. Inference was performed on the Intel NCS2.
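To make the H bridge part a bit more concrete, here is a rough sketch of how a signed speed could be turned into H bridge signals for one motor group. It assumes a common two-direction-inputs-plus-PWM style of driver; which exact signals your board needs depends on the H bridge, and the function name and the 0 to 255 duty range (which matches pigpio's default PWM range) are assumptions rather than what ran on the rover.

```python
def hbridge_signals(speed, max_duty=255):
    """Map a signed speed in [-1, 1] to (in1, in2, duty) for one motor group.

    in1/in2 are the two direction inputs (1 = high, 0 = low) and duty is the
    PWM duty cycle for the enable pin. This layout is an assumption.
    """
    speed = max(-1.0, min(1.0, float(speed)))
    duty = int(round(abs(speed) * max_duty))
    if duty == 0:
        return (0, 0, 0)      # both inputs low: motor is not driven
    if speed > 0:
        return (1, 0, duty)   # forward
    return (0, 1, duty)       # reverse
```

With the motors grouped left and right, you would call this once per side and write the three values to that side's pins.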

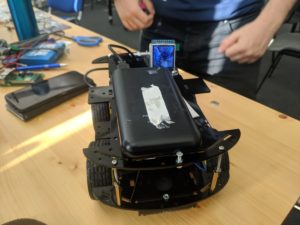

To top it off we added a small 128 x 128 TFT display and a 10 Ah power bank to power the Raspberry Pi.

The soft how behind it

In true hackathon style we ran 3 servers on the Raspberry Pi.

One that ran the inference, created the video stream and provided a route allowing the client to request a model change.

One was a Flask REST API that allowed the client to control the motors and the camera servos.
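As an illustration of what such a control API might look like, here is a minimal Flask sketch. The route names (`/motors`, `/camera`), the JSON fields and the value ranges are all made up for this example and are not the ones from our project. The handlers only validate, clamp and store the last command; a real version would pass the values on to the motor and servo code.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)

# Last accepted commands. Hypothetical layout: motor speeds in [-1, 1],
# camera angles in degrees in [0, 180].
state = {
    "motors": {"left": 0.0, "right": 0.0},
    "camera": {"pan": 90.0, "tilt": 90.0},
}


def clamp(value, low, high):
    """Convert to float and clamp into [low, high]."""
    return max(low, min(high, float(value)))


@app.route("/motors", methods=["POST"])
def set_motors():
    data = request.get_json(silent=True) or {}
    try:
        left = clamp(data["left"], -1.0, 1.0)
        right = clamp(data["right"], -1.0, 1.0)
    except (KeyError, TypeError, ValueError):
        return jsonify(error="expected numeric 'left' and 'right'"), 400
    state["motors"] = {"left": left, "right": right}
    return jsonify(state["motors"])


@app.route("/camera", methods=["POST"])
def set_camera():
    data = request.get_json(silent=True) or {}
    try:
        pan = clamp(data["pan"], 0.0, 180.0)
        tilt = clamp(data["tilt"], 0.0, 180.0)
    except (KeyError, TypeError, ValueError):
        return jsonify(error="expected numeric 'pan' and 'tilt'"), 400
    state["camera"] = {"pan": pan, "tilt": tilt}
    return jsonify(state["camera"])
```

Clamping on the server side means a buggy or laggy client can never ask the hardware for more than full speed or an out-of-range angle. To serve it you would call `app.run(host="0.0.0.0")` or use the `flask run` command.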

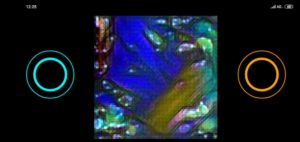

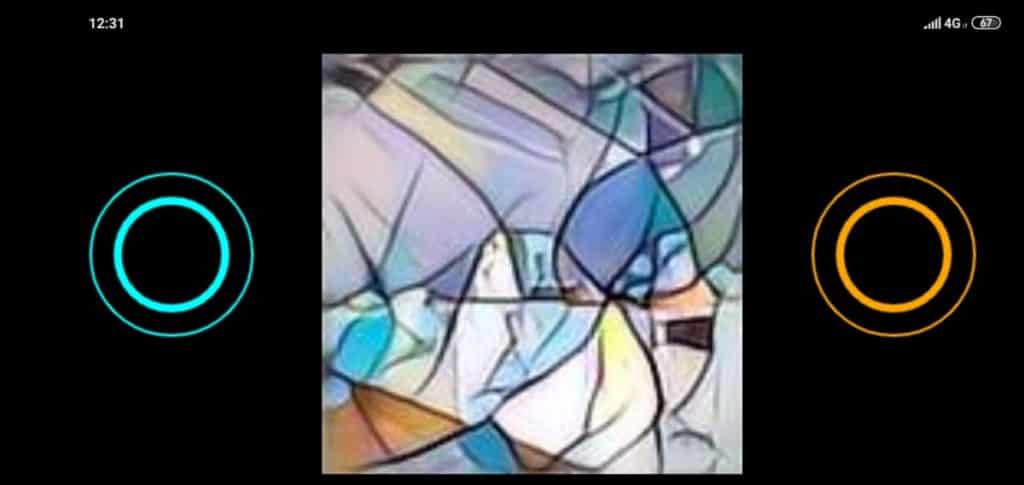

And finally, a small Python 3 HTTP server that provided the frontend. Although initially we planned on using it from the laptop, I decided to turn it into a mobile ‘app’ with 2 joysticks.

The cyan joystick controls the motors, and the orange one controls the camera.
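Since the motors are grouped into a left and a right side, one common way to turn a single joystick into motor commands is differential ("tank") mixing. The sketch below shows that idea; it is an assumption about how such a mapping can be done, not a copy of our frontend code, and the axis conventions (x positive to the right, y positive forward, both in [-1, 1]) are chosen for the example.

```python
def mix(x, y):
    """Turn joystick axes into (left, right) speeds in [-1, 1].

    x: turn axis, positive = right. y: throttle axis, positive = forward.
    """
    left = y + x
    right = y - x
    # Scale both sides down together so neither exceeds full speed.
    scale = max(1.0, abs(left), abs(right))
    return left / scale, right / scale
```

Pushing the stick straight forward gives `(1.0, 1.0)`, pushing it fully right gives `(1.0, -1.0)` so the rover spins in place, and the forward-right corner gives `(1.0, 0.0)` for a turn around the right wheels.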

We found a number of pretrained style networks, and we also trained one of our own based on this image:

The results

We managed to win second place using our little rover. A good hackathon indeed.